social-media

TikTok boss meets European officials as scrutiny intensifies

TikTok’s CEO met Tuesday with European Union officials about strict new digital regulations in the 27-nation bloc as the Chinese-owned social media app faces growing scrutiny from Western authorities over data privacy, cybersecurity and misinformation.

In meetings in Brussels, Shou Zi Chew and four officials from the EU’s executive Commission discussed concerns ranging from child safety to investigations into user data flowing to China, according to European readouts from two of the meetings and tweets from a third.

TikTok is wildly popular with young people but its Chinese ownership has raised fears that Beijing could use it to scoop up user data or push pro-China narratives or misinformation. TikTok is owned by ByteDance, a Chinese company that moved its headquarters to Singapore in 2020.

U.S. states including Kansas, Wisconsin, Louisiana and Virginia have moved to ban the video-sharing app from state-issued devices for government workers, and it also would be prohibited from most U.S. government devices under a congressional spending bill.

Fears were stoked by news reports last year that a China-based team improperly accessed data of U.S. TikTok users, including two journalists, as part of a covert surveillance program to ferret out the source of leaks to the press.

There are also concerns that the company is sending masses of user data to China, in breach of stringent European privacy rules. EU data protection watchdogs in Ireland have opened two investigations into TikTok, including one on its transfer of personal data to China.

“I count on TikTok to fully execute its commitments to go the extra mile in respecting EU law and regaining trust of European regulators,” Vera Jourova, the commissioner for values and transparency, said after her meeting with Chew. “There cannot be any doubt that data of users in Europe are safe and not exposed to illegal access from third-country authorities.”

Caroline Greer, TikTok's director of public policy and government relations, said on Twitter that it was a “constructive and helpful meeting.”

“Online safety & building trust is our number one priority,” Greer tweeted.

The company has said it takes data security “incredibly seriously” and fired the ByteDance employees involved in improperly accessing user data.

Jourova said she also grilled Chew about child safety, the spread of Russian disinformation on the platform and transparency of paid political content.

Executive Vice President Margrethe Vestager, who's in charge of competition and antitrust matters, met with Chew to “review how the company is preparing for complying with its obligations under the European Commission’s regulation, namely the Digital Services Act and possibly under the Digital Markets Act.”

The Digital Services Act is aimed at cleaning up toxic content from online platforms and the Digital Markets Act is designed to rein in the power of big digital companies.

They also discussed privacy and data transfer obligations in reference to recent news reports on “aggressive data harvesting and surveillance in the U.S.,” the readout said.

Chew also met with Justice Commissioner Didier Reynders and Home Affairs Commissioner Ylva Johansson.

Reynders tweeted that he “insisted on the importance” of TikTok fully complying with EU privacy rules and cooperating with the Irish watchdog.

“We also took stock of the company’s commitments to fight hate speech online and guarantee the protection of all consumers, including children,” he said.

Chew is scheduled to hold a video chat with Thierry Breton, the commissioner for digital policy, on Jan. 19.

2 years ago

Seattle schools sue tech giants over social media harm

The public school district in Seattle has filed a novel lawsuit against the tech giants behind TikTok, Instagram, Facebook, YouTube and Snapchat, seeking to hold them accountable for the mental health crisis among youth.

Seattle Public Schools filed the lawsuit Friday in U.S. District Court. The 91-page complaint says the social media companies have created a public nuisance by targeting their products to children.

It blames them for worsening mental health and behavioral disorders including anxiety, depression, disordered eating and cyberbullying; making it more difficult to educate students; and forcing schools to take steps such as hiring additional mental health professionals, developing lesson plans about the effects of social media, and providing additional training to teachers.

“Defendants have successfully exploited the vulnerable brains of youth, hooking tens of millions of students across the country into positive feedback loops of excessive use and abuse of Defendants’ social media platforms,” the complaint said. “Worse, the content Defendants curate and direct to youth is too often harmful and exploitive ....”

Meta, Google, Snap and TikTok did not immediately respond to requests for comment Saturday.

While federal law — Section 230 of the Communications Decency Act — helps protect online companies from liability arising from what third-party users post on their platforms, the lawsuit argues that provision does not protect the tech giants' behavior in this case.

“Plaintiff is not alleging Defendants are liable for what third-parties have said on Defendants’ platforms but, rather, for Defendants’ own conduct,” the lawsuit said. “Defendants affirmatively recommend and promote harmful content to youth, such as pro-anorexia and eating disorder content.”

The lawsuit says that from 2009 to 2019, there was on average a 30% increase in the number of Seattle Public Schools students who reported feeling “so sad or hopeless almost every day for two weeks or more in a row” that they stopped doing some typical activities.

The school district is asking the court to order the companies to stop creating the public nuisance, to award damages, and to pay for prevention education and treatment for excessive and problematic use of social media.

While hundreds of families are pursuing lawsuits against the companies over harms they allege their children have suffered from social media, it's not clear if any other school districts have filed a complaint like Seattle's.

Internal studies revealed by Facebook whistleblower Frances Haugen in 2021 showed that the company knew that Instagram negatively affected teenagers by harming their body image and making eating disorders and thoughts of suicide worse. She alleged that the platform prioritized profits over safety and hid its own research from investors and the public.

2 years ago

Musk says he can't get fair trial in California, wants Texas

Elon Musk has urged a federal judge to shift a trial in a shareholder lawsuit out of San Francisco because he says negative local media coverage has biased potential jurors against him.

Instead, in a filing submitted late Friday — less than two weeks before the trial was set to begin on Jan. 17 — Musk's lawyers argue it should be moved to the federal court in the western district of Texas. That district includes the state capital of Austin, which is where Musk relocated his electric car company, Tesla, in late 2021.

The shareholder lawsuit stems from Musk's tweets in August 2018 when he said he had sufficient financing to take Tesla private at $420 a share — an announcement that caused heavy volatility in Tesla's share price.

In a victory for the shareholders last spring, Judge Edward Chen ruled that Musk’s tweets were false and reckless.

If moving the trial isn’t possible, Musk’s lawyers want it postponed until negative publicity regarding the billionaire’s purchase of Twitter has died down.

“For the last several months, the local media have saturated this district with biased and negative stories about Mr. Musk,” attorney Alex Spiro wrote in a court filing. Those news items have personally blamed Musk for recent layoffs at Twitter, Spiro wrote, and have charged that the job cuts may have even violated laws.

The shareholders' attorneys emphasized the last-minute timing of the request, saying, “Musk’s concerns are unfounded and his motion is meritless.”

“The Northern District of California is the proper venue for this lawsuit and where it has been actively litigated for over four years,” attorney Nicholas Porritt wrote in an email.

The filing by Musk's attorneys also notes that Twitter has laid off about 1,000 residents in the San Francisco area since he purchased the company in late October.

“A substantial portion of the jury pool ... is likely to hold a personal and material bias against Mr. Musk as a result of recent layoffs at one of his companies as individual prospective jurors — or their friends and relatives — may have been personally impacted,” the filing said.

Musk has also been criticized by San Francisco’s mayor and other local officials for the job cuts, the filing said.

2 years ago

Musk polls Twitter users about whether he should step down

Elon Musk is asking Twitter’s users to decide if he should stay in charge of the social media platform after acknowledging he made a mistake Sunday in launching new speech restrictions that banned mentions of rival social media websites.

In yet another drastic policy change, Twitter had announced that users would no longer be able to link to Facebook, Instagram, Mastodon and other platforms the company described as “prohibited.”

But the move generated so much immediate criticism, including from past defenders of Twitter's new billionaire owner, that Musk promised not to make any more major policy changes without an online survey of users.

“My apologies. Won’t happen again,” Musk tweeted, before launching a new 12-hour poll asking if he should step down as head of Twitter. “I will abide by the results of this poll.”

The action to block competitors was Musk's latest attempt to crack down on certain speech after he shut down a Twitter account last week that was tracking the flights of his private jet.

The banned platforms included mainstream websites such as Facebook and Instagram, and upstart rivals Mastodon, Tribel, Nostr, Post and former President Donald Trump's Truth Social. Twitter gave no explanation for why the blacklist included those seven websites but not others such as Parler, TikTok or LinkedIn.

Twitter had said it would at least temporarily suspend accounts that include the banned websites in their profile — a practice so widespread it would have been difficult to enforce the restrictions on Twitter's millions of users around the world. Not only links but attempts to bypass the ban by spelling out “instagram dot com” could have led to a suspension, the company said.

A test case was the prominent venture capitalist Paul Graham, who in the past has praised Musk but on Sunday told his 1.5 million Twitter followers that this was the “last straw” and to find him on Mastodon. His Twitter account was promptly suspended, and soon after restored as Musk promised to reverse the policy implemented just hours earlier.

Musk said Twitter will still suspend some accounts according to the policy but “only when that account’s *primary* purpose is promotion of competitors.”

Twitter previously took action to block links to Mastodon after its main Twitter account tweeted about the @ElonJet controversy last week. Mastodon has grown rapidly in recent weeks as an alternative for Twitter users who are unhappy with Musk’s overhaul of Twitter since he bought the company for $44 billion in late October and began restoring accounts that ran afoul of the previous Twitter leadership's rules against hateful conduct and other harms.

Musk permanently banned the @ElonJet account on Wednesday, then changed Twitter's rules to prohibit the sharing of another person’s current location without their consent. He then took aim at journalists who were writing about the jet-tracking account, which can still be found on other social media sites, alleging that they were broadcasting “basically assassination coordinates.”

He used that to justify Twitter's moves last week to suspend the accounts of numerous journalists who cover the social media platform and Musk, among them reporters working for The New York Times, Washington Post, CNN, Voice of America and other publications. Many of those accounts were restored following an online poll by Musk.

Then, over the weekend, The Washington Post’s Taylor Lorenz became the latest journalist to be temporarily banned. She said she was suspended after posting a message on Twitter tagging Musk and requesting an interview.

Sally Buzbee, The Washington Post's executive editor, called it an “arbitrary suspension of another Post journalist” that further undermined Musk’s promise to run Twitter as a platform dedicated to free speech.

“Again, the suspension occurred with no warning, process or explanation — this time as our reporter merely sought comment from Musk for a story,” Buzbee said. By midday Sunday, Lorenz's account was restored, as was the tweet she thought had triggered her suspension.

Musk’s promise to let users decide his future role at Twitter through an unscientific online survey appeared to come out of nowhere Sunday, though he had also promised in November that a reorganization was happening soon.

Musk was questioned in court on Nov. 16 about how he splits his time among Tesla and his other companies, including SpaceX and Twitter. Musk had to testify in Delaware’s Court of Chancery over a shareholder’s challenge to Musk’s potentially $55 billion compensation plan as CEO of the electric car company.

Musk said he never intended to be CEO of Tesla, and that he didn’t want to be chief executive of any other companies either, preferring to see himself as an engineer instead. Musk also said he expected an organizational restructuring of Twitter to be completed in the next week or so. It’s been more than a month since he said that.

In public banter with Twitter followers Sunday, Musk expressed pessimism about the prospects for a new CEO, saying that person “must like pain a lot” to run a company that “has been in the fast lane to bankruptcy.”

“No one wants the job who can actually keep Twitter alive. There is no successor,” Musk tweeted.

2 years ago

Twitter relaunching subscriber service after debacle

Twitter is once again attempting to launch its premium service, a month after a previous attempt failed.

The social media company said Saturday it would let users buy subscriptions to Twitter Blue to get a blue checkmark and access special features starting Monday.

The blue checkmark was originally given to companies, celebrities, government entities and journalists verified by the platform. After Elon Musk bought Twitter for $44 billion in October, he launched a service granting blue checks to anyone willing to pay $8 a month. But it was inundated by imposter accounts, including those impersonating Musk's businesses Tesla and SpaceX, so Twitter suspended the service days after its launch.

The relaunched service will cost $8 a month for web users and $11 a month for iPhone users. Twitter says subscribers will see fewer ads, be able to post longer videos and have their tweets featured more prominently.

3 years ago

As Musk is learning, content moderation is a messy job

Now that he’s back on Twitter, neo-Nazi Andrew Anglin wants somebody to explain the rules.

Anglin, the founder of an infamous neo-Nazi website, was reinstated Thursday, one of many previously banned users to benefit from an amnesty granted by Twitter's new owner Elon Musk. The next day, Musk banished Ye, the rapper formerly known as Kanye West, after he posted a swastika with a Star of David in it.

“That's cool,” Anglin tweeted Friday. “I mean, whatever the rules are, people will follow them. We just need to know what the rules are.”

Ask Musk. Since the world’s richest man paid $44 billion for Twitter, the platform has struggled to define its rules for misinformation and hate speech, issued conflicting and contradictory announcements, and failed to fully address what researchers say is a troubling rise in hate speech.

As the “chief twit” may be learning, running a global platform with nearly 240 million active daily users requires more than good algorithms and often demands imperfect solutions to messy situations — tough choices that must ultimately be made by a human and are sure to displease someone.

A self-described free speech absolutist, Musk has said he wants to make Twitter a global digital town square. But he also said he wouldn't make major decisions about content or about restoring banned accounts before setting up a “content moderation council” with diverse viewpoints.

He soon changed his mind after polling users on Twitter, and offered reinstatement to a long list of formerly banned users including ex-President Donald Trump, Ye, the satire site The Babylon Bee, the comedian Kathy Griffin and Anglin, the neo-Nazi.

And while Musk's own tweets suggested he would allow all legal content on the platform, Ye's banishment shows that's not entirely the case. The swastika image posted by the rapper falls in the “lawful but awful” category that often bedevils content moderators, according to Eric Goldman, a technology law expert and professor at Santa Clara University law school.

While Europe has imposed rules requiring social media platforms to create policies on misinformation and hate speech, Goldman noted that in the U.S. at least, loose regulations allow Musk to run Twitter as he sees fit, despite his inconsistent approach.

“What Musk is doing with Twitter is completely permissible under U.S. law,” Goldman said.

Pressure from the EU may force Musk to lay out his policies to ensure he is complying with the new law, which takes effect next year. Last month, a senior EU official warned Musk that Twitter would have to improve its efforts to combat hate speech and misinformation; failure to comply could lead to huge fines.

In another confusing move, Twitter announced in late November that it would end its policy prohibiting COVID-19 misinformation. Days later, it posted an update claiming that “None of our policies have changed.”

On Friday, Musk revealed what he said was the inside story of Twitter's decision in 2020 to limit the spread of a New York Post story about Hunter Biden's laptop.

Twitter initially blocked links to the story on its platform, citing concerns that it contained material obtained through computer hacking. That decision was reversed after it was criticized by then-Twitter CEO Jack Dorsey. Facebook also took actions to limit the story's spread.

The information revealed by Musk included Twitter's decision to delete a handful of tweets after receiving a request from Joe Biden's campaign. The tweets included nude photos of Hunter Biden that had been shared without his consent — a violation of Twitter's rules against revenge porn.

Instead of revealing nefarious conduct or collusion with Democrats, Musk's revelation highlighted the kind of difficult content moderation decisions that he will now face.

“Impossible, messy and squishy decisions” are unavoidable, according to Yoel Roth, Twitter's former head of trust and safety who resigned a few weeks into Musk's ownership.

While far from perfect, the old Twitter strove to be transparent with users and steady in enforcing its rules, Roth said. That changed under Musk, he told a Knight Foundation forum this week.

“When push came to shove, when you buy a $44 billion thing, you get to have the final say in how that $44 billion thing is governed,” Roth said.

While much of the attention has been on Twitter’s moves in the U.S., the cutbacks of content-moderation workers are affecting other parts of the world too, according to activists with the #StopToxicTwitter campaign.

“We’re not talking about people not having resilience to hear things that hurt feelings,” said Thenmozhi Soundararajan, executive director of Equality Labs, which works to combat caste-based discrimination in South Asia. “We are talking about the prevention of dangerous genocidal hate speech that can lead to mass atrocities.”

Soundararajan's organization sits on Twitter’s Trust and Safety Council, which hasn’t met since Musk took over. She said “millions of Indians are terrified about who is going to get reinstated,” and the company has stopped responding to the group’s concerns.

“So what happens if there’s another call for violence? Like, do I have to tag Elon Musk and hope that he’s going to address the pogrom?” Soundararajan said.

Instances of hate speech and racial epithets soared on Twitter after Musk's purchase as some users sought to test the new owner's limits. The number of tweets containing hateful terms continues to rise, according to a report published Friday by the Center for Countering Digital Hate, a group that tracks online hate and extremism.

Musk has said Twitter has reduced the spread of tweets containing hate speech, making them harder to find unless a user searches for them. But that failed to satisfy the center's CEO, Imran Ahmed, who called the rise in hate speech a “clear failure to meet his own self-proclaimed standards.”

Immediately after Musk’s takeover and the firing of much of Twitter’s staff, researchers who previously had flagged harmful hate speech or misinformation to the platform reported that their pleas were going unanswered.

Jesse Littlewood, vice president for campaigns at Common Cause, said his group reached out to Twitter last week about a tweet from U.S. Rep. Marjorie Taylor Greene that alleged election fraud in Arizona. Musk had reinstated Greene's personal account after she was kicked off Twitter for spreading COVID-19 misinformation.

This time, Twitter was quick to respond, telling Common Cause that the tweet didn't violate any rules and would stay up — even though Twitter requires the labeling or removal of content that spreads false or misleading claims about election results.

Twitter gave Littlewood no explanation for why it wasn’t following its own rules.

“I find that pretty confounding,” Littlewood said.

Twitter did not respond to messages seeking comment for this story. Musk has defended the platform's sometimes herky-jerky moves since he took over, and said mistakes will happen as it evolves. “We will do lots of dumb things,” he tweeted.

To Musk’s many online fans, the disarray is a feature, not a bug, of the site under its new ownership, and a reflection of the free speech mecca they hope Twitter will be.

“I love Elon Twitter so far,” tweeted a user who goes by the name Some Dude. “The chaos is glorious!”

3 years ago

Musk says granting 'amnesty' to suspended Twitter accounts

New Twitter owner Elon Musk said Thursday that he is granting “amnesty” for suspended accounts, which online safety experts predict will spur a rise in harassment, hate speech and misinformation.

The billionaire's announcement came after he asked users, in a poll posted to his timeline, to vote on reinstatements for accounts that have not “broken the law or engaged in egregious spam.” The yes vote was 72%.

“The people have spoken. Amnesty begins next week. Vox Populi, Vox Dei,” Musk tweeted using a Latin phrase meaning “the voice of the people, the voice of God.”

Musk used the same Latin phrase after posting a similar poll last weekend before reinstating the account of former President Donald Trump, which Twitter had banned for encouraging the Jan. 6, 2021, Capitol insurrection. Trump has said he won’t return to Twitter but has not deleted his account.

Such online polls are anything but scientific and can easily be influenced by bots.

In the month since Musk took over Twitter, groups that monitor the platform for racist, anti-Semitic and other toxic speech say it’s been on the rise on the world’s de facto public square. That has included a surge in racist abuse of World Cup soccer players that Twitter is allegedly failing to act on.

The uptick in harmful content is in large part due to the disorder following Musk’s decision to lay off half the company’s 7,500-person workforce, fire top executives, and then institute a series of ultimatums that prompted hundreds more to quit. Also let go were an untold number of contractors responsible for content moderation. Among those resigning over a lack of faith in Musk’s willingness to keep Twitter from devolving into a chaos of uncontrolled speech was Twitter’s head of trust and safety, Yoel Roth.

Major advertisers have also abandoned the platform.

On Oct. 28, the day after he took control, Musk tweeted that no suspended accounts would be reinstated until Twitter formed a “content moderation council” with diverse viewpoints that would consider the cases.

On Tuesday, he said he was reneging on that promise because he’d agreed to it at the insistence of “a large coalition of political-social activists groups” who later “broke the deal” by urging that advertisers at least temporarily stop giving Twitter their business.

A day earlier, Twitter reinstated the personal account of far-right Rep. Marjorie Taylor Greene, which was banned in January for violating the platform’s COVID misinformation policies.

Musk, meanwhile, has been getting increasingly chummy on Twitter with right-wing figures. Before this month’s U.S. midterm elections he urged “independent-minded” people to vote Republican.

A report from the European Union published Thursday said Twitter took longer to review hateful content and removed less of it this year compared with 2021. The report was based on data collected over the spring — before Musk acquired Twitter — as part of an annual evaluation of online platforms’ compliance with the bloc’s code of conduct on disinformation. It found that Twitter assessed just over half of the notifications it received about illegal hate speech within 24 hours, down from 82% in 2021.

3 years ago

Meta brings Facebook Reels to Bangladesh

After the launch of Instagram Reels in August, Meta has now brought Facebook Reels to everyone in Bangladesh, introducing short-form, entertaining video experiences and tools to creators and audiences on the Facebook app.

It is available for both iOS and Android users, Meta said in a statement Sunday.

"Reels gives people a new outlet to express their creativity with the ability to record videos, select music, and add photos and timed text. It helps creators expand the reach of their content, and for new creators to be discovered," it added.

Meta has expanded the availability of Facebook Reels for iOS and Android to more than 150 countries across the globe.

"We're also introducing better ways to help creators to earn money, new creation tools and more places to watch and create Facebook Reels," the company said.

Meta is focusing on making Reels the best way for creators to get discovered, connect with their audience and earn money. The company also wants to make it fun and easy for people to find and share relevant and entertaining content.

Since Facebook Reels launched in the US, it has seen creators like Kurt Tocci (and his cat, Zeus) share original comedic skits, author and Bulletin writer Andrea Gibson offer a reading of their published poetry, Nigerian-American couple Ling and Lamb try new foods, and dancer and creator Niana Guerrero do trending dances, like the #ZooChallenge.

Bangladeshi content creators like Raba Khan, Ridy Sheikh, and Petuk Couple also shared their reels under the #ReelDeshi hashtag and invited others to create their videos to show what makes them real "deshis." These one-minute travel stories, dance challenges and recipes show glimpses of the historic sites, music and food of Bangladesh.

"At Meta, we are always testing new ways for people to express themselves and entertain others. Reels have been inspiring Bangladeshi creators on Instagram, and now people on Facebook can discover more entertaining content, and creators can reach new audiences," Jordi Fornies, Meta's director of emerging markets in Asia Pacific, said.

"We are excited to see the creativity and connections that Reels will unlock for the Bangladeshi Facebook community."

According to Meta, video makes up more than 50 percent of the time spent on Facebook. "There is growing interest in watching fun, entertaining short-form videos, and expressing themselves by making their own."

3 years ago

Tweets with racial slurs soar since Musk takeover

Instances of racial slurs have soared on Twitter since Elon Musk purchased the influential platform, despite assurances from the platform that it had reduced hateful activity, a digital civil rights group reported Thursday.

Researchers at the Center for Countering Digital Hate found that the number of tweets containing one of several different racial slurs soared in the week after Musk bought Twitter.

A racial epithet used to attack Black people was found more than 26,000 times, three times the average for 2022. Use of a slur that targets trans people increased 53%, while instances of an offensive term for homosexual men went up 39% over the yearly average.

Examples of offensive terms used to target Jews and Hispanics also increased.

All told, the researchers looked at nearly 80,000 English-language tweets and retweets from around the world that contained one of the offensive terms they searched for.

“The figures show that despite claims from Twitter’s Head of Trust and Safety, Yoel Roth, that the platform had succeeded in reducing the number of times hate speech was being seen on Twitter’s search and trending page, the actual volume of hateful tweets has spiked,” according to the analysis from the center, a nonprofit with offices in the U.S. and United Kingdom.

Roth resigned Thursday, joining the large number of Twitter employees who have either resigned from Twitter or been laid off since Musk took control.

A day before, Roth acknowledged the recent increase in hate speech on the site but said the platform had made significant progress in bringing the numbers down.

“We’ve put a stop to the spike in hateful conduct, but that the level of hateful activity on the service is now about 95% lower than it was before the acquisition,” Roth said in remarks broadcast live on Twitter. “Changes that we’ve made and the proactive enforcement that we carried out are making Twitter safer relative to where it was before.”

An executive confirmed Roth’s resignation to coworkers on an internal messaging board seen by The Associated Press on Thursday.

On Oct. 31, Twitter announced that 1,500 accounts had been removed for posting hate speech. The company also said it had greatly reduced the visibility of posts containing slurs, making them harder to find on the platform.

“We have actually seen hateful speech at times this week decline *below* our prior norms, contrary to what you may read in the press,” Musk tweeted last week.

Musk has described himself as a free speech absolutist, and he is widely expected to revamp Twitter's content moderation policies. While he said no changes have been made so far, Musk has made significant layoffs at the company, raising questions about its ability to police misinformation and hate speech before Tuesday's midterm election.

It may take some time to accurately assess the platform's performance in the election and to determine whether Twitter has adopted a different strategy for content that violates its policies, said Renee DiResta, research manager at the Stanford Internet Observatory.

“The civic integrity policy was unchanged,” DiResta said of Twitter under its new ownership. “Now, there is a difference between having a policy and enforcing a policy.”

Shortly after Musk purchased Twitter, some users posted hate speech, seemingly to test the boundaries of the platform under its new owner.

Within just 12 hours of Musk’s purchase being finalized, references to a specific racist epithet used to demean Black people shot up by 500%, according to an analysis conducted by the Network Contagion Research Institute, a Princeton, New Jersey-based firm that tracks disinformation.

Twitter did not immediately respond Thursday to messages seeking comment on the findings of the new report.

3 years ago

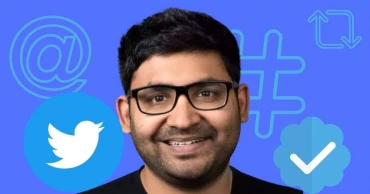

Ousted Twitter CEO Parag Agrawal could receive $42 million

Parag Agrawal, the ousted Indian-origin CEO of Twitter, is expected to receive $42 million following his departure from the company, after Elon Musk took control of Twitter and fired the CEO, the chief financial officer and the company’s general counsel.

If Agrawal is fired within a year of a change in control at the company, he would receive an estimated $42 million, reports NDTV.

Elon Musk, the new owner of Twitter, closed the $44-billion deal to buy the company, the NDTV report added.

Musk is in charge of the social media platform and has fired CEO Parag Agrawal, CFO Ned Segal and General Counsel Vijaya Gadde.

Musk had expressed his lack of faith in Twitter's management.

Agrawal, who previously served as Twitter's CTO, was appointed CEO in November last year. His total compensation for 2021 was $30.4 million, the majority of which came through stock awards, according to NDTV.

3 years ago